Intelligent Space

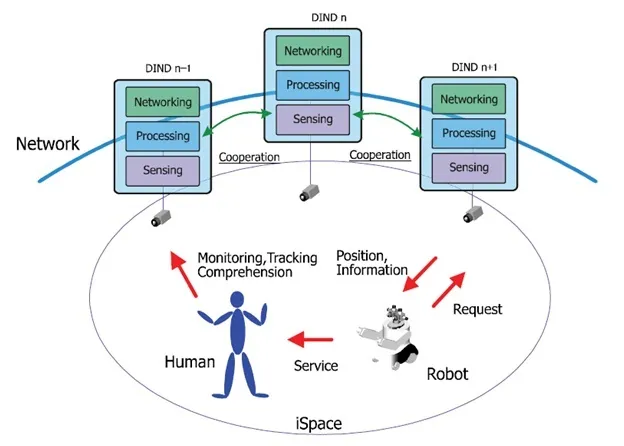

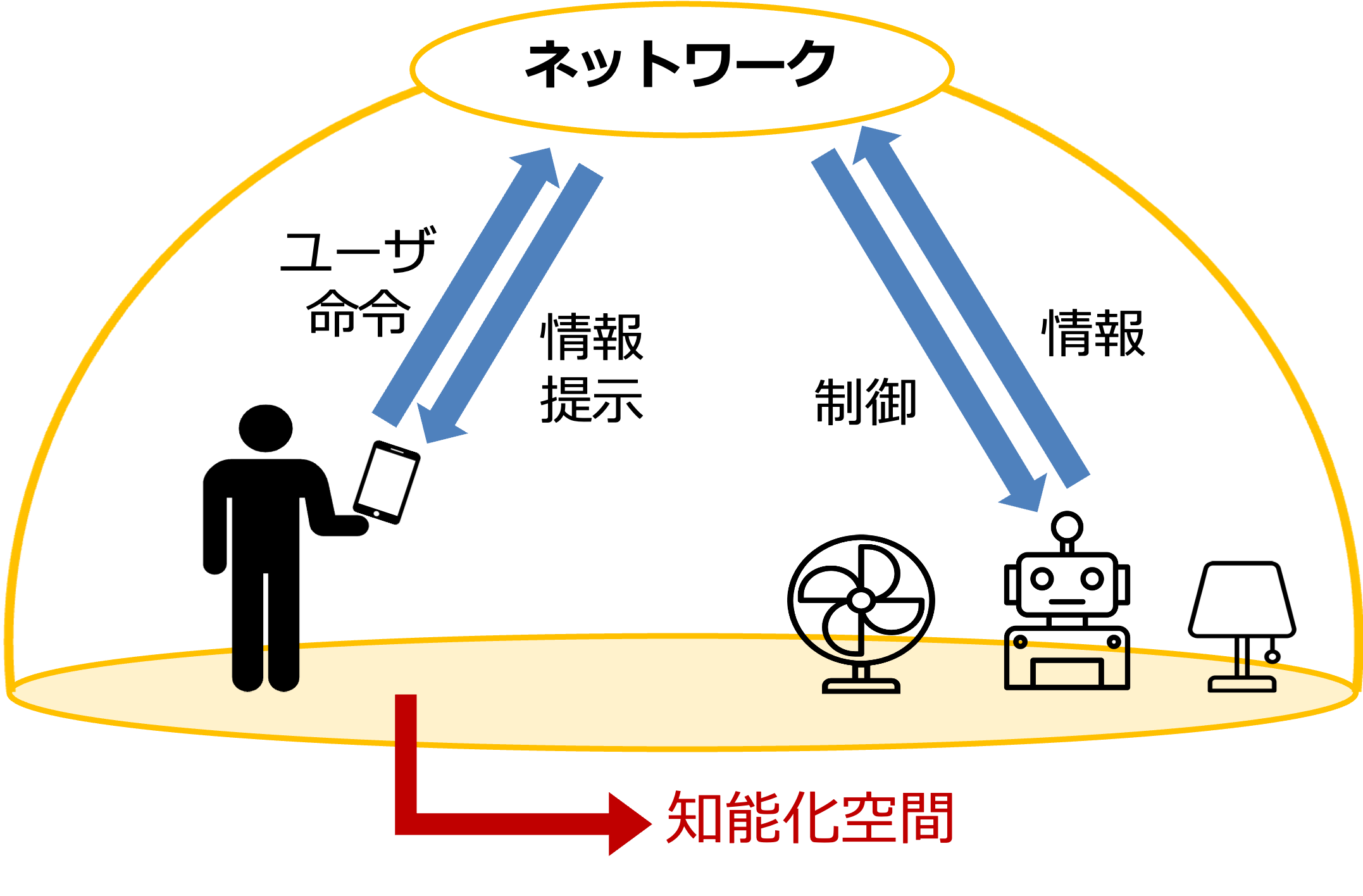

The Intelligent Space (iSpace) is a space that analyzes human behavior through computer vision, presents user-centric information, and autonomously controls robots as users intend. This study aims to help humans and robots improve their performance, both informationally and physically. To build such a smart space, iSpace needs to know “when, where, and who did what”. iSpace is therefore a research theme that requires a variety of fundamental technologies and functions, and it encompasses a wide range of subtopics.

iSpace Coding

To realize iSpace, it is essential to create environments that are convenient and comfortable for users. However, the functions required in such spaces vary from user to user. This is where the concept of “iSpace coding” becomes important: a technology that lets users freely define the behaviors of robots and appliances according to their individual needs. iSpace coding enables anyone to configure the functions of the space easily through intuitive input methods, such as dedicated interfaces or natural language. As a result, even general users can build an iSpace tailored to their preferences.
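As a rough illustration of what a user-defined behavior might look like once it has been entered, the sketch below models each behavior as a condition on the state of the space paired with an action. The `Rule` class, the state keys (`hour`, `people`), and the action strings are all hypothetical and are not taken from the actual iSpace coding system.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """One user-defined behavior: when `condition` holds, request `action`."""
    name: str
    condition: Callable[[dict], bool]  # receives the current state of the space
    action: str                        # hypothetical command string


def triggered_actions(rules, state):
    """Return the actions of all rules whose condition is true for `state`."""
    return [rule.action for rule in rules if rule.condition(state)]


# Two example rules a user might define (assumed names and thresholds).
rules = [
    Rule("evening light", lambda s: s["hour"] >= 18 and s["people"] > 0, "light:on"),
    Rule("empty room fan", lambda s: s["people"] == 0, "fan:off"),
]

print(triggered_actions(rules, {"hour": 19, "people": 2}))
```

A dedicated interface or a natural-language front end would then only need to produce `Rule` objects; how the real system represents behaviors is not described here.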

Real-time Person Re-identification in Multi-Camera Environment

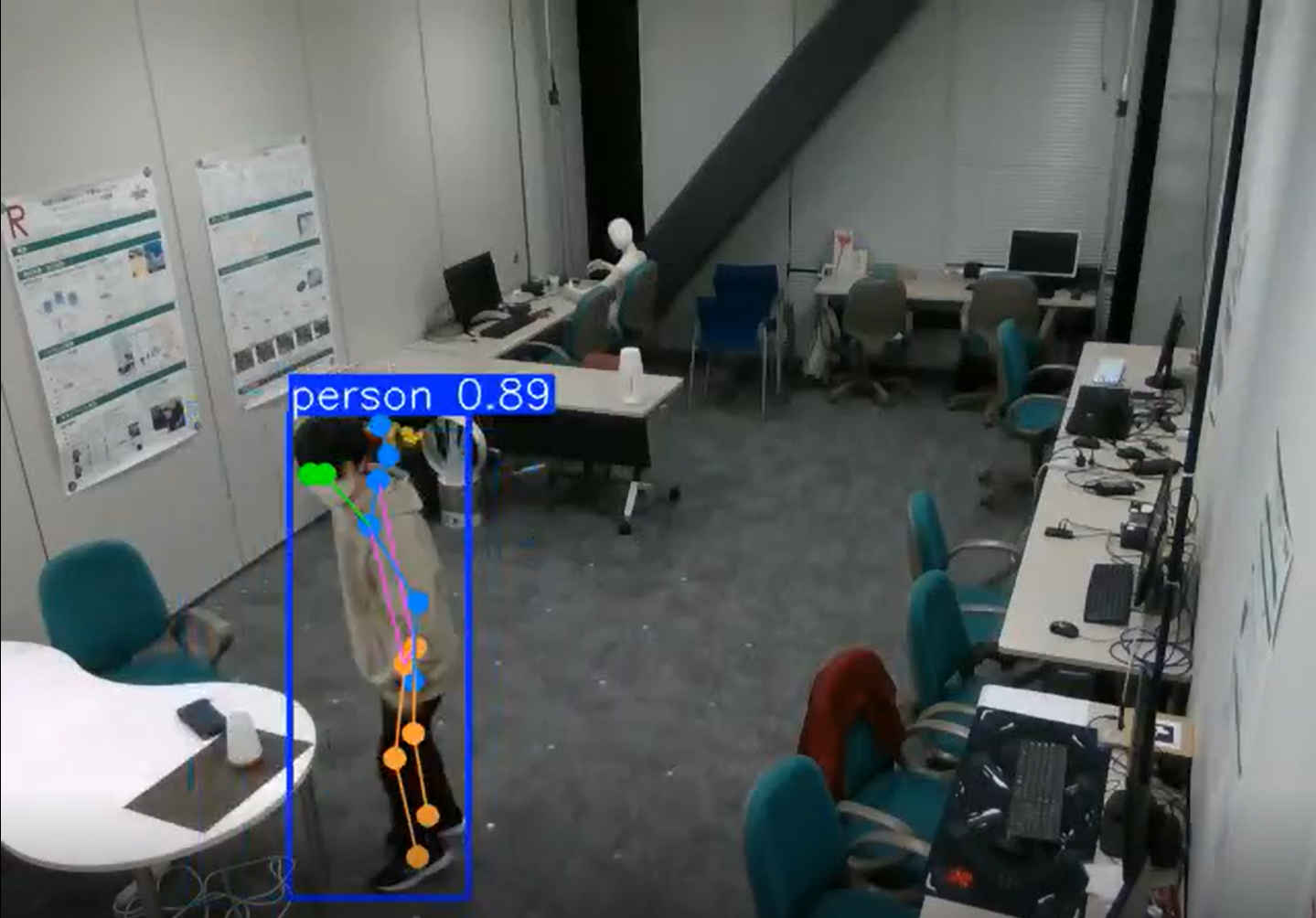

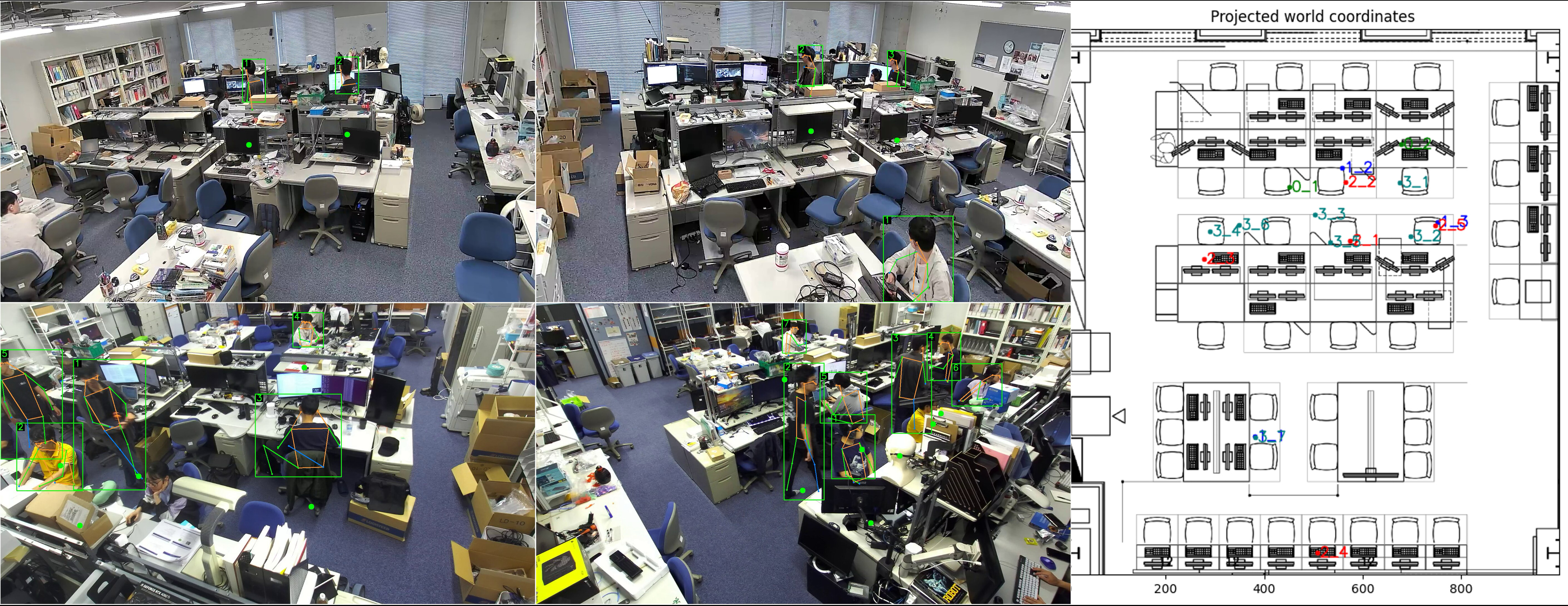

We have developed a system capable of detecting and tracking people across different cameras in a room. For each camera, deep learning is used to detect the position and skeleton pose of each person.

The system then performs multi-camera tracking by assigning each person a single ID number that stays consistent across cameras. All of this runs in real time on a single consumer GPU, which makes the system practical to deploy in applications.
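The idea of one ID per person across cameras can be sketched with a deliberately simple stand-in: assume each camera's detections have already been projected onto a shared floor coordinate system, and match them to existing tracks by nearest distance. This greedy matcher is an assumption made for illustration, not the re-identification method used in the system, and the function name and the `max_dist` threshold are hypothetical.

```python
import math


def assign_global_ids(tracks, detections, max_dist=0.5):
    """Assign a global ID to each detection from ONE camera frame.

    tracks:     dict mapping global_id -> (x, y) last known floor position;
                updated in place.
    detections: list of (x, y) floor positions from a single camera.
    max_dist:   assumed matching radius in metres.
    """
    assigned = []
    used = set()  # a track may match at most one detection per camera frame
    for x, y in detections:
        best_id, best_d = None, max_dist
        for gid, (tx, ty) in tracks.items():
            if gid in used:
                continue
            d = math.hypot(x - tx, y - ty)
            if d <= best_d:
                best_id, best_d = gid, d
        if best_id is None:
            # No track close enough: treat this as a newly seen person.
            best_id = max(tracks, default=-1) + 1
        tracks[best_id] = (x, y)
        used.add(best_id)
        assigned.append(best_id)
    return assigned


tracks = {}
ids_cam_a = assign_global_ids(tracks, [(1.0, 1.0), (3.0, 3.0)])
ids_cam_b = assign_global_ids(tracks, [(3.1, 2.9), (1.1, 0.9)])
print(ids_cam_a, ids_cam_b)
```

Calling the function once per camera lets the second camera reuse the IDs created from the first camera's detections. A real system would also use appearance or skeleton features rather than position alone.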

Development of an Information-Sharing Platform

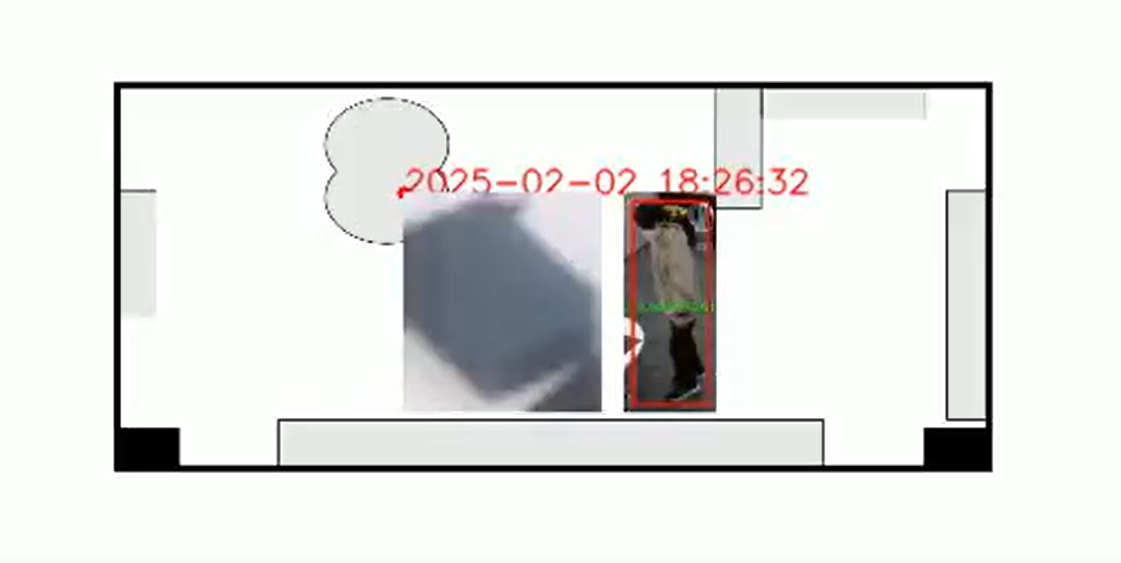

This research focuses on developing a platform that integrates data from multiple cameras installed in iSpace into a unified map, enabling information sharing with robots and various applications.

The platform accumulates and visualizes the positions and movement histories of people within the space, allowing for real-time human tracking. This makes it possible to provide context-aware services based on behavior patterns—for example, offering assistance only to individuals who have been working in a specific area for an extended period.
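The dwell-time example can be sketched as a query over the accumulated movement history. The data layout (timestamped floor positions per person), the rectangular area, and the function name are assumptions made for illustration and do not reflect the platform's actual interface.

```python
def long_dwellers(history, area, min_seconds):
    """Return IDs of people who have stayed inside `area` continuously,
    up to their latest sample, for at least `min_seconds`.

    history: dict mapping person_id -> list of (t, x, y), sorted by time t (s).
    area:    (xmin, ymin, xmax, ymax) rectangle on the unified map.
    """
    xmin, ymin, xmax, ymax = area
    result = []
    for pid, samples in history.items():
        entered = None  # time at which the current uninterrupted stay began
        for t, x, y in samples:
            inside = xmin <= x <= xmax and ymin <= y <= ymax
            if inside:
                if entered is None:
                    entered = t
            else:
                entered = None  # leaving the area resets the stay
        if entered is not None and samples[-1][0] - entered >= min_seconds:
            result.append(pid)
    return result


history = {
    1: [(0, 1.0, 1.0), (300, 1.2, 1.0), (600, 1.1, 1.1)],  # stays at the desk
    2: [(0, 1.0, 1.0), (300, 5.0, 5.0), (600, 1.0, 1.0)],  # leaves and returns
}
print(long_dwellers(history, area=(0, 0, 2, 2), min_seconds=600))
```

Only person 1 qualifies here, because person 2's stay was interrupted; a service could use such a result to decide whom to offer assistance.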

Detection of Left-Behind Objects

This study aims to develop a system that helps prevent people from forgetting items. Forgetting things is a common issue in daily life, often causing emotional distress, financial loss, and anxiety. Many people feel especially uneasy when leaving for work or school, wondering whether they have forgotten something.

Our system detects forgotten items by using cameras to track a person’s interactions with objects, such as touching, moving, or leaving them, and thereby determine the final location of each item. It also logs the type of object and the person who handled it, enabling timely notifications to the user when necessary. In the future, we aim to incorporate the user’s schedule to predict which items they are likely to forget and notify them in advance, as well as to provide intuitive alerts. This technology also holds potential for applications in other fields, such as caregiving and security.
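A minimal sketch of the logging idea follows, assuming the camera pipeline already produces a chronological list of interaction events. The event fields, the action labels (`touch`, `move`, `leave`), and the set of departed people are hypothetical, and the rule used here (the last event for an object is a `leave` by someone who has since exited) is only one simple way to flag candidates.

```python
def find_left_behind(events, departed):
    """Return (object, person, location) for objects whose most recent
    interaction was being left somewhere by a person who has since exited.

    events:   chronological list of dicts with keys
              'person', 'obj', 'action', 'location'.
    departed: set of people known to have left the space.
    """
    last_event = {}
    for event in events:  # later events overwrite earlier ones per object
        last_event[event["obj"]] = event
    return [
        (obj, e["person"], e["location"])
        for obj, e in last_event.items()
        if e["action"] == "leave" and e["person"] in departed
    ]


events = [
    {"person": "alice", "obj": "umbrella", "action": "move", "location": "desk"},
    {"person": "alice", "obj": "umbrella", "action": "leave", "location": "door rack"},
    {"person": "bob", "obj": "keys", "action": "leave", "location": "table"},
    {"person": "bob", "obj": "keys", "action": "touch", "location": "table"},
]
print(find_left_behind(events, departed={"alice", "bob"}))
```

Each returned tuple carries what a notification would need: which item, who handled it last, and where it was last seen.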

Interaction System Using Mobile Devices

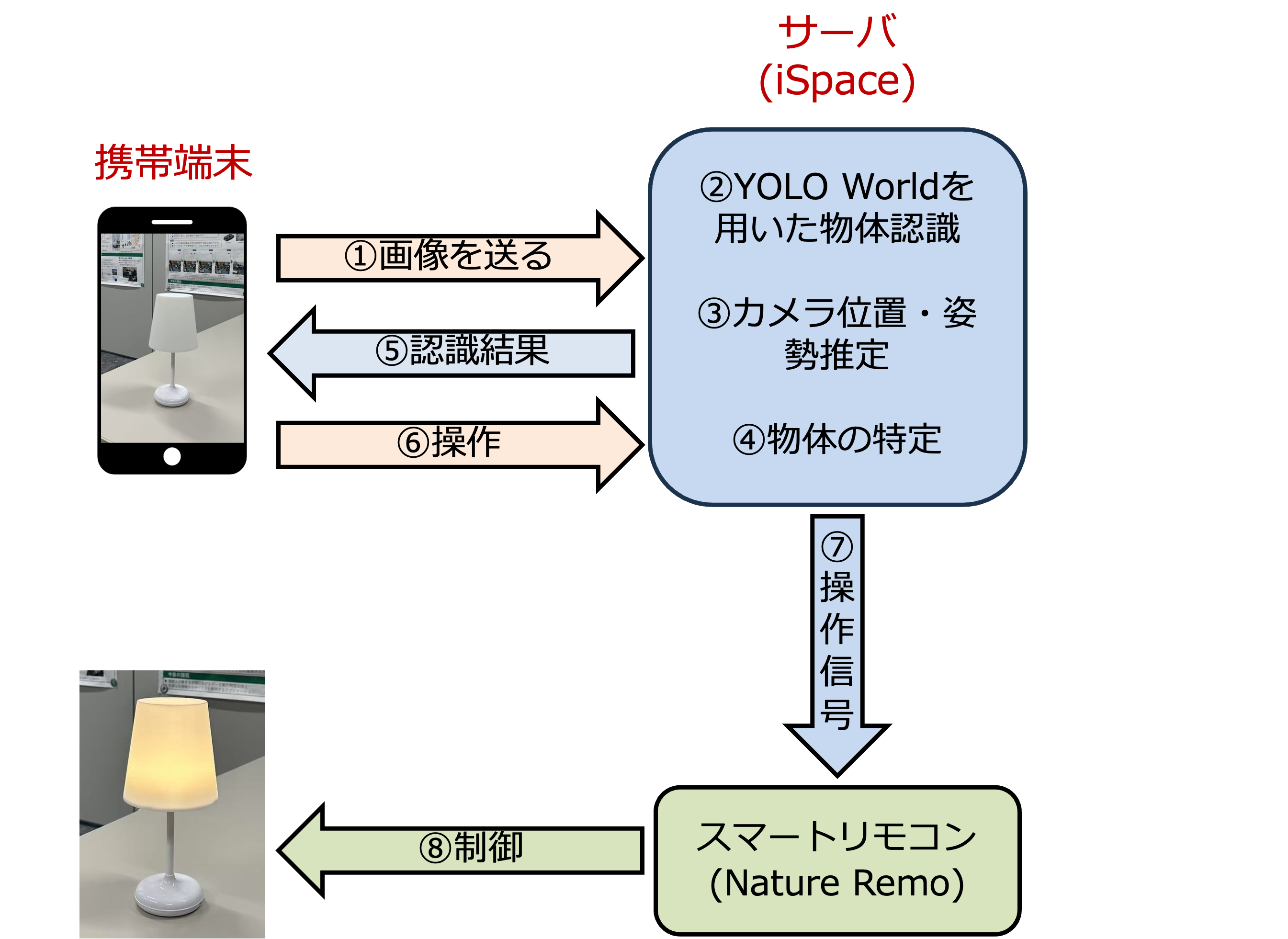

In this study, we propose an interaction system that enables users to intuitively interact with devices in iSpace using mobile devices such as smartphones. When the user points their smartphone camera at a device, a corresponding control interface is displayed on the screen, allowing direct operation.

A previously proposed system called R-Fii required pre-trained data to operate devices, and it faced challenges in distinguishing between multiple instances of the same type of object.

To address these issues, our system incorporates “YOLO-World,” a state-of-the-art open-vocabulary object detection model that can recognize objects without being trained in advance on those specific objects. By also using the position and orientation (pose) of the smartphone camera, the system can accurately distinguish between objects of the same type.
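To show how pose information can separate two devices of the same type, the sketch below picks the registered device whose direction from the phone is closest to the camera's heading. It works in 2D, assumes device positions are known in advance, and uses hypothetical names and a hypothetical 20-degree tolerance; the object detector itself is not called here, only its output class label is assumed.

```python
import math


def pick_device(phone_xy, heading_rad, detected_type, devices,
                max_angle_rad=math.radians(20)):
    """Return the name of the device of `detected_type` that the phone is
    pointing at, or None if none lies within `max_angle_rad` of the heading.

    devices: dict mapping name -> (type, (x, y)) with known room positions.
    """
    best, best_angle = None, max_angle_rad
    for name, (dtype, (dx, dy)) in devices.items():
        if dtype != detected_type:
            continue
        bearing = math.atan2(dy - phone_xy[1], dx - phone_xy[0])
        # Smallest absolute angle between bearing and heading, in [0, pi].
        diff = abs((bearing - heading_rad + math.pi) % (2 * math.pi) - math.pi)
        if diff <= best_angle:
            best, best_angle = name, diff
    return best


devices = {
    "lamp_a": ("lamp", (2.0, 0.0)),
    "lamp_b": ("lamp", (0.0, 2.0)),
    "tv": ("tv", (2.0, 0.1)),
}
print(pick_device((0.0, 0.0), 0.0, "lamp", devices))
print(pick_device((0.0, 0.0), math.pi / 2, "lamp", devices))
```

Both lamps share the same class label, so the detector alone cannot tell them apart; the heading of the phone resolves which one the user means.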

Furniture Repositioning System

As part of research on intelligent space, this study focuses on developing a system that optimizes the arrangement of indoor objects according to the current situation. During everyday use, furniture in a room may shift from its intended position. This research specifically targets chairs, which tend to be moved frequently, with the goal of developing a system that can correct their positions in real time.

To achieve this, the system uses cameras installed in the space to detect the current positions of chairs and compares them with their predefined locations to determine whether any displacement has occurred. When a deviation is detected, a robot receives the positional information of the chair and returns it to its original position.
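The comparison step described above can be sketched as follows, assuming chair positions are available as 2D floor coordinates keyed by a chair ID. The function name, the task dictionary format, and the 0.2 m tolerance are illustrative assumptions rather than details of the actual system.

```python
import math


def find_displaced(detected, home, tolerance=0.2):
    """Compare detected chair positions with their predefined positions and
    return one task per chair that has moved by more than `tolerance` metres.

    detected: dict mapping chair_id -> (x, y) current position from cameras.
    home:     dict mapping chair_id -> (x, y) predefined position.
    """
    tasks = []
    for chair_id, (hx, hy) in home.items():
        if chair_id not in detected:
            continue  # chair not visible in this frame; nothing to compare
        cx, cy = detected[chair_id]
        offset = math.hypot(cx - hx, cy - hy)
        if offset > tolerance:
            tasks.append({"chair": chair_id, "from": (cx, cy),
                          "to": (hx, hy), "offset": offset})
    return tasks


home = {"c1": (1.0, 1.0), "c2": (2.0, 1.0)}
detected = {"c1": (1.05, 1.0), "c2": (2.6, 1.8)}
for task in find_displaced(detected, home):
    print(task["chair"], task["from"], "->", task["to"])
```

Each task holds the positional information a robot would need: where the chair currently is and where it should be returned. The tolerance keeps small detection noise from triggering unnecessary moves.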